Here at i3 Simulations, we stay up to date with new research in simulation, education, and Extended Reality (XR) technology. We’ve decided to share this knowledge through a monthly blog series covering the latest evidence on how XR simulations are transforming medical training.

ChatGPT and Generative Artificial Intelligence for Medical Education: Potential Impact and Opportunity

Boscardin, C.K. et al. – Academic Medicine (2023)

This paper offers a scholarly perspective on the potential impact and opportunities of generative AI tools such as ChatGPT for medical education across four key domains – admissions, learning, assessment, and research.

While such AI tools raise valid concerns about accuracy, bias, and ethical use, the authors argue that educators need to increase their AI literacy so they can integrate these tools thoughtfully. Used well, the tools could help level access disparities in admissions essay writing, strengthen critical inquiry in learning, enable continuous feedback in assessment, and bring new perspectives to research.

Human oversight is still needed to verify the validity of generated information. The authors urge medical educators to act as stewards, fostering responsible and ethical AI adoption among learners.

Employing Large Language Models for Surgical Education: An In-depth Analysis of ChatGPT-4

Siu, A.H.Y. et al. – Journal of Medical Education (2023)

This study evaluated the potential of ChatGPT, BingAI, and BARD to assist in surgical education by assessing their responses to common post-operative scenarios faced by junior doctors.

ChatGPT consistently provided the most accurate, comprehensive, and safe recommendations in line with guidelines. It strongly emphasised involving senior surgeons early and acknowledged its limitations in managing surgical emergencies. BingAI and BARD lacked specificity in their advice and occasionally provided concerning misinformation. On quantitative measures, BARD scored highest for readability, while ChatGPT scored highest for reliability. The study suggests these AI tools, especially ChatGPT, could augment surgical training by supporting clinical reasoning and decision-making.

Risks around accuracy, accountability, and over-reliance must be addressed through physician supervision and content validation to ensure responsible integration.

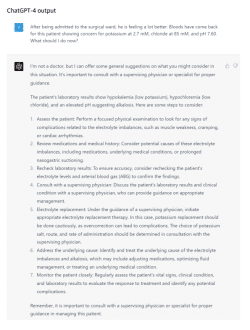

ChatGPT and Clinical Training: Perception, Concerns, and Practice of Pharm-D Students

Zawiah, M. et al. – Journal of Multidisciplinary Healthcare (2023)

This cross-sectional study surveyed 211 PharmD students to assess their perceptions, concerns, and practices regarding the integration of ChatGPT into pharmacy practice training. The majority of students (66%) saw potential benefits in using ChatGPT for clinical tasks such as identifying treatment problems and drug interactions.

However, students raised concerns about over-reliance on the technology, the accuracy of its responses, and ethical issues. Only about half of the students had used ChatGPT during their training, mainly for drug information, care planning, and calculations. Around half were inclined to use ChatGPT in future practice. Students who had already used ChatGPT tended to perceive greater benefits and fewer concerns.

The study highlights both the opportunities and the uncertainties of integrating ChatGPT into pharmacy training in a way that complements professional expertise.

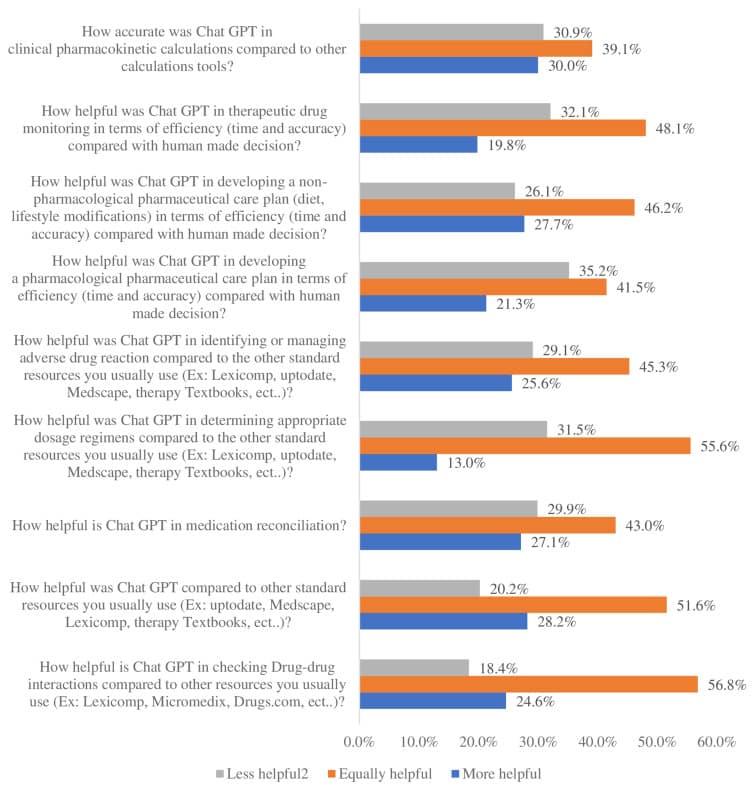

Evaluating Capabilities of Large Language Models: Performance of GPT-4 on Surgical Knowledge Assessments

Beaulieu-Jones, B.R. et al. – Surgery (2024)

This study evaluated how well ChatGPT (GPT-4) answered questions from two assessments commonly used to test surgical knowledge. Overall, ChatGPT answered 71–72% of the questions correctly, similar to average human performance.

However, the system performed better on multiple-choice than on open-ended questions. ChatGPT’s responses were also inconsistent when the same questions were repeated. This reliability problem would need to be addressed before such systems could be safely implemented in clinical settings.

Despite the high scores on knowledge tests, it remains unclear whether AI systems have the reasoning ability needed to assist practicing surgeons.

The Operating and Anesthetic Reference Assistant (OARA): A Fine-tuned Large Language Model for Resident Teaching

Guthrie, E., Levy, D. and del Carmen, G. – The American Journal of Surgery (2024)

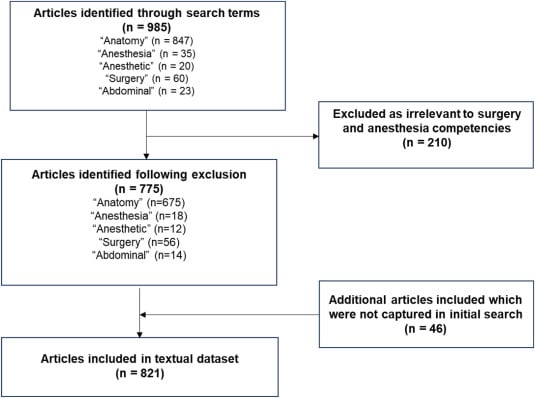

This paper evaluates OARA, a large language model fine-tuned on surgery and anesthesia materials, for assisting surgical resident education. OARA achieved 65.3% accuracy in advising on clinical scenarios, performing better on surgery questions than on anesthesia questions.

It excelled at case-based reasoning that required applying knowledge, although hardware constraints limited the quality of its longer responses. Despite having only a fraction of the parameters of other medical LLMs, OARA outperformed these general-purpose models. This proof-of-concept highlights the potential of specialised LLMs to dynamically represent complex medical knowledge for resident teaching.

As interactive educational tools, such models could supplement traditional textbooks and help address gaps in surgical training curricula. With further refinement, they may be integrated into clinical environments for just-in-time learning to optimise resident education.

What did the research find?

These studies have explored the potential of large language models such as ChatGPT to assist in medical training across pharmacy, surgery, and other disciplines. AI systems have shown promise in answering medical knowledge questions, providing clinical advice, and supporting educational goals.

However, concerns persist around accuracy, reliability, ethical issues, and over-reliance on the technology. Careful integration under physician supervision, with ongoing validation of responses, is required to responsibly realise the benefits while minimising risks.

Overall, this emerging research suggests generative AI could play a constructive role in augmenting medical education if developed thoughtfully – complementing rather than replacing human expertise.

To learn more about XR technology in simulation training, check out our evidence overview, trial the training software, or contact us with any research questions!